AWS Cost

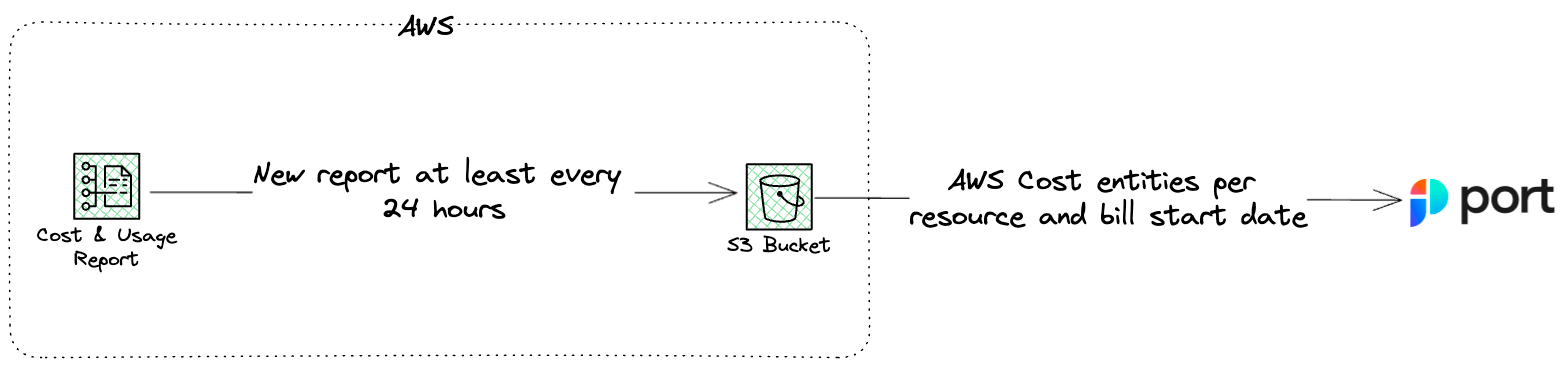

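The AWS Cost integration lets you import your AWS Cost reports into Port.

Each entity in Port represents the cost details of a single resource per bill.

Entities are kept in Port for a number of months, according to your configuration (3 months by default).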

Installation

- AWS Setup - required.

- Port Setup - required.

- Exporter Setup - choose one of the following options: Local, Docker, GitHub Workflow, GitLab Pipeline.

AWS

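1. Create an AWS S3 Bucket to host the cost reports (replace <AWS_BUCKET_NAME> and <AWS_REGION> with your desired bucket name and AWS region). For regions other than us-east-1, the AWS CLI also requires adding --create-bucket-configuration LocationConstraint=<AWS_REGION> to the command: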

aws s3api create-bucket --bucket <AWS_BUCKET_NAME> --region <AWS_REGION>

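2. Create a local file named policy.json. Copy and paste the following content into the file and save it, making sure to update <AWS_BUCKET_NAME> and <AWS_ACCOUNT_ID> with the bucket name you created in step 1 and your AWS account ID.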

policy.json

{

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "billingreports.amazonaws.com"

},

"Action": ["s3:GetBucketAcl", "s3:GetBucketPolicy"],

"Resource": "arn:aws:s3:::<AWS_BUCKET_NAME>",

"Condition": {

"StringEquals": {

"aws:SourceArn": "arn:aws:cur:us-east-1:<AWS_ACCOUNT_ID>:definition/*",

"aws:SourceAccount": "<AWS_ACCOUNT_ID>"

}

}

},

{

"Sid": "Stmt1335892526596",

"Effect": "Allow",

"Principal": {

"Service": "billingreports.amazonaws.com"

},

"Action": "s3:PutObject",

"Resource": "arn:aws:s3:::<AWS_BUCKET_NAME>/*",

"Condition": {

"StringEquals": {

"aws:SourceArn": "arn:aws:cur:us-east-1:<AWS_ACCOUNT_ID>:definition/*",

"aws:SourceAccount": "<AWS_ACCOUNT_ID>"

}

}

}

]

}
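Replacing the placeholders by hand is easy to get wrong, so the substitution can also be scripted. The following is a minimal sketch, not part of the Port exporter: `render_policy`, the shortened `TEMPLATE`, and the sample bucket name and account ID are all hypothetical and only illustrate the two placeholders used in policy.json above.

```python
import json

# Hypothetical helper (not part of the Port exporter): fills the two
# placeholders used in policy.json and checks the result is valid JSON.
def render_policy(template: str, bucket_name: str, account_id: str) -> dict:
    rendered = (
        template.replace("<AWS_BUCKET_NAME>", bucket_name)
        .replace("<AWS_ACCOUNT_ID>", account_id)
    )
    if "<AWS_" in rendered:
        raise ValueError("unreplaced placeholder left in policy")
    return json.loads(rendered)

# Shortened template with the same placeholders as policy.json.
TEMPLATE = """
{
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {"Service": "billingreports.amazonaws.com"},
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::<AWS_BUCKET_NAME>/*",
      "Condition": {
        "StringEquals": {
          "aws:SourceArn": "arn:aws:cur:us-east-1:<AWS_ACCOUNT_ID>:definition/*",
          "aws:SourceAccount": "<AWS_ACCOUNT_ID>"
        }
      }
    }
  ]
}
"""

# Sample values are placeholders for illustration only.
policy = render_policy(TEMPLATE, "my-cost-bucket", "123456789012")
print(policy["Statement"][0]["Resource"])
```

Parsing the rendered text with `json.loads` catches a malformed file before it is passed to the AWS CLI.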

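3. Add the bucket policy you created in step 2 to the bucket you created in step 1 (replace <AWS_BUCKET_NAME> with the bucket name from step 1). This policy allows AWS to write the Cost and Usage Report (CUR) to your bucket. The following command assumes policy.json is in your current working directory; if you saved it elsewhere, update the file path accordingly: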

aws s3api put-bucket-policy --bucket <AWS_BUCKET_NAME> --policy file://policy.json

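4. Create a local file named report-definition.json. Copy and paste the following recommended content into the file and save it, making sure to update <AWS_BUCKET_NAME> and <AWS_REGION> with the bucket name you created in step 1 and the target AWS region.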

report-definition.json

{

"ReportName": "aws-monthly-cost-report-for-port",

"TimeUnit": "MONTHLY",

"Format": "textORcsv",

"Compression": "GZIP",

"AdditionalSchemaElements": ["RESOURCES"],

"S3Bucket": "<AWS_BUCKET_NAME>",

"S3Prefix": "cost-reports",

"S3Region": "<AWS_REGION>",

"RefreshClosedReports": true,

"ReportVersioning": "OVERWRITE_REPORT"

}

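5. Create an AWS Cost and Usage Report that generates cost reports daily and saves them in the reports bucket (the following command assumes report-definition.json is in your current working directory; if you saved it elsewhere, update the file path accordingly):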

aws cur put-report-definition --report-definition file://report-definition.json

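6. Wait up to 24 hours for the first report to be generated. Run the following AWS CLI command to check whether the CUR has been created and added to your bucket, making sure to replace <AWS_BUCKET_NAME> with the bucket name you created in step 1:

aws s3 ls s3://<AWS_BUCKET_NAME>/cost-reports/aws-monthly-cost-report-for-port/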

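If the command above returns at least one directory named after the date range of the day after you created the CUR, the report is ready and can be ingested into Port.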
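The readiness check can be automated by scanning the listing for date-range directories. This is a rough sketch under two assumptions that should be verified against your own bucket: that CUR period directories are named like `20240101-20240201/`, and that `aws s3 ls` prints them on lines of the form `PRE <name>/`. The function name `report_periods` and the sample listing are hypothetical.

```python
import re

# Assumption: period directories look like "20240101-20240201/" and
# `aws s3 ls` prints prefixes as "PRE <name>/".
PERIOD_DIR = re.compile(r"^\s*PRE\s+(\d{8}-\d{8})/\s*$")

def report_periods(ls_output: str) -> list[str]:
    """Return the date-range directory names found in an `aws s3 ls` listing."""
    periods = []
    for line in ls_output.splitlines():
        match = PERIOD_DIR.match(line)
        if match:
            periods.append(match.group(1))
    return periods

# Hypothetical listing used only for illustration.
sample = """\
                           PRE 20240101-20240201/
                           PRE 20240201-20240301/
"""
print(report_periods(sample))
```

An empty result means no report has been delivered yet, so the exporter would have nothing to ingest.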

Port

1. Create the awsCost blueprint (the blueprint below is an example and can be modified as needed):

AWS Cost Blueprint

{

"identifier": "awsCost",

"title": "AWS Cost",

"icon": "AWS",

"schema": {

"properties": {

"unblendedCost": {

"title": "Unblended Cost",

"type": "number",

"description": "Represent your usage costs on the day they are charged to you. It’s the default option for analyzing costs."

},

"blendedCost": {

"title": "Blended Cost",

"type": "number",

"description": "Calculated by multiplying each account’s service usage against a blended rate. This cost is not used frequently due to the way that it is calculated."

},

"amortizedCost": {

"title": "Amortized Cost",

"type": "number",

"description": "View recurring and upfront costs distributed evenly across the months, and not when they were charged. Especially useful when using AWS Reservations or Savings Plans."

},

"ondemandCost": {

"title": "On-Demand Cost",

"type": "number",

"description": "The total cost for the line item based on public On-Demand Instance rates."

},

"payingAccount": {

"title": "Paying Account",

"type": "string"

},

"usageAccount": {

"title": "Usage Account",

"type": "string"

},

"product": {

"title": "Product",

"type": "string"

},

"billStartDate": {

"title": "Bill Start Date",

"type": "string",

"format": "date-time"

}

},

"required": []

},

"mirrorProperties": {},

"calculationProperties": {

"link": {

"title": "Link",

"calculation": "if (.identifier | startswith(\"arn:\")) then \"https://console.aws.amazon.com/go/view?arn=\" + (.identifier | split(\"@\")[0]) else null end",

"type": "string",

"format": "url"

}

},

"relations": {}

}
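The `link` calculation property in the blueprint above is a jq expression. To make its behavior easier to follow, here is an approximate Python equivalent. The function name `console_link` and the sample identifiers are hypothetical; the `@` suffix in the identifiers is inferred only from the `split("@")` call in the expression.

```python
from typing import Optional

CONSOLE_PREFIX = "https://console.aws.amazon.com/go/view?arn="

def console_link(identifier: str) -> Optional[str]:
    """Approximate the blueprint's jq `link` calculation in Python."""
    # Only identifiers that start with an ARN get a console link.
    if identifier.startswith("arn:"):
        # Keep the part before the first "@", as split("@")[0] does in jq.
        return CONSOLE_PREFIX + identifier.split("@")[0]
    return None

# Hypothetical identifiers used only for illustration.
print(console_link("arn:aws:s3:::my-bucket@2024-01"))
print(console_link("i-0123456789abcdef0@2024-01"))
```

Entities whose identifier is not an ARN get no link, which matches the `else null` branch of the expression.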

Exporter

Exporter environment variables for all setup options:

| Env Var | Description | Required | Default |
|---|---|---|---|
| PORT_CLIENT_ID | Your Port client id | true | |
| PORT_CLIENT_SECRET | Your Port client secret | true | |
| PORT_BASE_URL | Port API base url | false | https://api.getport.io/v1 |
| PORT_BLUEPRINT | Port blueprint identifier to use | false | awsCost |
| PORT_MAX_WORKERS | Max concurrent threads that interact with the Port API (upsert/delete entities) | false | 5 |
| PORT_MONTHS_TO_KEEP | Number of months to keep the cost reports in Port | false | 3 |
| AWS_BUCKET_NAME | Your AWS bucket name to store cost reports | true | |
| AWS_COST_REPORT_S3_PATH_PREFIX | Your AWS cost report S3 path prefix | false | cost-reports/aws-monthly-cost-report-for-port |
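To illustrate how the required flags and defaults in the table above fit together, here is a minimal sketch of loading them in Python. It is not the exporter's actual code: `load_settings`, `DEFAULTS`, and `REQUIRED` are hypothetical names, and only the variable names and default values come from the table.

```python
import os
from typing import Mapping

# Defaults and required names copied from the environment variable table.
DEFAULTS = {
    "PORT_BASE_URL": "https://api.getport.io/v1",
    "PORT_BLUEPRINT": "awsCost",
    "PORT_MAX_WORKERS": "5",
    "PORT_MONTHS_TO_KEEP": "3",
    "AWS_COST_REPORT_S3_PATH_PREFIX": "cost-reports/aws-monthly-cost-report-for-port",
}
REQUIRED = ("PORT_CLIENT_ID", "PORT_CLIENT_SECRET", "AWS_BUCKET_NAME")

def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Hypothetical loader: fail fast on missing required values, apply defaults."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing required variables: " + ", ".join(missing))
    settings = {name: env[name] for name in REQUIRED}
    for name, default in DEFAULTS.items():
        settings[name] = env.get(name, default)
    # The two numeric settings are parsed as integers.
    settings["PORT_MAX_WORKERS"] = int(settings["PORT_MAX_WORKERS"])
    settings["PORT_MONTHS_TO_KEEP"] = int(settings["PORT_MONTHS_TO_KEEP"])
    return settings

# Example with placeholder values instead of real credentials.
settings = load_settings({
    "PORT_CLIENT_ID": "id",
    "PORT_CLIENT_SECRET": "secret",
    "AWS_BUCKET_NAME": "my-cost-bucket",
})
print(settings["PORT_MONTHS_TO_KEEP"])
```

Passing a plain dictionary instead of `os.environ` makes it possible to check a configuration without exporting anything in the shell.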

Local

1. Make sure Python is installed and that the version is at least Python 3.11:

python3 --version

2. Clone the port-aws-cost-exporter repository using your preferred cloning method (the example below uses SSH), then switch your working directory to the cloned repository.

git clone git@github.com:port-labs/port-aws-cost-exporter.git

cd port-aws-cost-exporter

3. Create a new virtual environment and install the requirements:

python3 -m venv venv

source venv/bin/activate

pip3 install -r requirements.txt

4. Set the required environment variables and run the exporter:

export PORT_CLIENT_ID=<PORT_CLIENT_ID>

export PORT_CLIENT_SECRET=<PORT_CLIENT_SECRET>

export AWS_BUCKET_NAME=<AWS_BUCKET_NAME>

export AWS_ACCESS_KEY_ID=<AWS_ACCESS_KEY_ID>

export AWS_SECRET_ACCESS_KEY=<AWS_SECRET_ACCESS_KEY>

python3 main.py

Docker

1. Create a .env file containing the required environment variables:

PORT_CLIENT_ID=<PORT_CLIENT_ID>

PORT_CLIENT_SECRET=<PORT_CLIENT_SECRET>

AWS_BUCKET_NAME=<AWS_BUCKET_NAME>

AWS_ACCESS_KEY_ID=<AWS_ACCESS_KEY_ID>

AWS_SECRET_ACCESS_KEY=<AWS_SECRET_ACCESS_KEY>

2. Run the exporter's Docker image with the .env file:

docker run -d --name getport.io-port-aws-cost-exporter --env-file .env ghcr.io/port-labs/port-aws-cost-exporter:latest

3. View the container logs to watch the progress:

docker logs -f getport.io-port-aws-cost-exporter

GitHub Workflow

1. Create the following GitHub repository secrets:

Required:

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- PORT_CLIENT_ID

- PORT_CLIENT_SECRET

Required for scheduling:

- AWS_BUCKET_NAME

2. Use the following GitHub workflow definition, which allows running the exporter on a schedule or manually:

GitHub Workflow run.yml

name: portAwsCostExporter

on:
  schedule:
    - cron: "0 0 * * *" # At 00:00 on every day
  workflow_dispatch:
    inputs:
      AWS_BUCKET_NAME:
        description: "The AWS Bucket name of the cost reports"
        type: string
        required: true

jobs:
  run:
    runs-on: ubuntu-latest
    steps:
      - name: run
        uses: docker://ghcr.io/port-labs/port-aws-cost-exporter:latest
        env:
          AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
          AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          AWS_BUCKET_NAME: ${{ inputs.AWS_BUCKET_NAME || secrets.AWS_BUCKET_NAME }}
          PORT_CLIENT_ID: ${{ secrets.PORT_CLIENT_ID }}
          PORT_CLIENT_SECRET: ${{ secrets.PORT_CLIENT_SECRET }}
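The workflow above sets AWS_BUCKET_NAME with the expression `inputs.AWS_BUCKET_NAME || secrets.AWS_BUCKET_NAME`, which is why the secret is only needed for scheduled runs: a manual run supplies the input, while a scheduled run has no input and falls back to the secret. The sketch below mirrors that fallback in Python for illustration only; `resolve_bucket_name` is a hypothetical name and is not part of the exporter or of GitHub Actions.

```python
from typing import Optional

def resolve_bucket_name(manual_input: Optional[str], secret: Optional[str]) -> str:
    """Pick the first non-empty value, like `inputs.X || secrets.X` in the workflow."""
    bucket = manual_input or secret
    if not bucket:
        raise ValueError("no bucket name from the manual input or the secret")
    return bucket

# Manual run: the workflow_dispatch input wins.
print(resolve_bucket_name("manual-bucket", "scheduled-bucket"))
# Scheduled run: no input is available, so the secret is used.
print(resolve_bucket_name(None, "scheduled-bucket"))
```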

GitLab Pipeline

1. Create the following GitLab CI/CD variables:

Required:

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_BUCKET_NAME

- PORT_CLIENT_ID

- PORT_CLIENT_SECRET

2. Use the following GitLab CI pipeline definition, which allows running the exporter on a schedule or manually:

GitLab Pipeline gitlab-ci.yml

image: docker:latest

services:
  - docker:dind

variables:
  PORT_CLIENT_ID: $PORT_CLIENT_ID
  PORT_CLIENT_SECRET: $PORT_CLIENT_SECRET
  AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
  AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
  AWS_BUCKET_NAME: $AWS_BUCKET_NAME

stages:
  - run

run_job:
  stage: run
  script:
    - docker run -e AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID -e AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY -e AWS_BUCKET_NAME=$AWS_BUCKET_NAME -e PORT_CLIENT_ID=$PORT_CLIENT_ID -e PORT_CLIENT_SECRET=$PORT_CLIENT_SECRET ghcr.io/port-labs/port-aws-cost-exporter:latest
  rules:
    - if: '$CI_PIPELINE_SOURCE == "schedule"'
      when: always

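3. Schedule the script:

   1. Go to your GitLab repository and select Build from the sidebar menu.

   2. Click Pipeline schedules, then click New Schedule.

   3. Fill in the schedule details in the form: Description, Interval Pattern, Timezone, Target branch.

   4. Make sure the Activated checkbox is selected.

   5. Click Create pipeline schedule to create the schedule.

Tip: It is recommended to schedule the pipeline to run at most once a day, because AWS refreshes the data once a day.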